AI: Are You an Accelerationist or a Decelerationist?

Randy Sukow


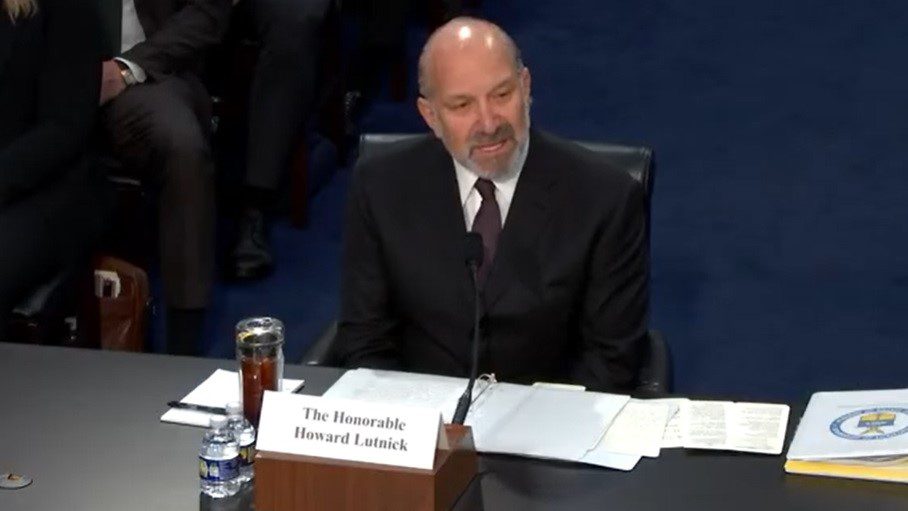

The world is not quite sure what to make of artificial intelligence (AI). Should industry and government embrace it as a technological leap forward or fear the potential risks it presents? The National Telecommunications and Information Administration (NTIA) yesterday released a policy report giving the Biden Administration’s assessment of the situation and eight lengthy policy recommendations.

The recommendations follow the lead set by an October 2023 presidential executive order, which declares that the United States “should be a global leader in AI development and adoption” but at the same time directs 50 government agencies to consider preemptive regulatory action in more than 100 different policy areas. The administration is concerned about everything from cybersecurity and physical infrastructure effects to potential labor force disruptions to consumer protection to potential racial bias in AI systems.

“Alongside their transformative potential for good, AI systems also pose risks of harm,” according to the executive summary of the NTIA report. “These risks include inaccurate or false outputs; unlawful discriminatory algorithmic decision making; destruction of jobs and the dignity of work; and compromised privacy, safety, and security. Given their influence and ubiquity, these systems must be subject to security and operational mechanisms that mitigate risk and warrant stakeholder trust that they will not cause harm.”

So, which is it? Are powerful AI algorithms, especially generative AI systems such as ChatGPT, which generate original text, images, audio and video, something to encourage? Or should the government regulate them into submission before the next generation of algorithms reaches the market? The administration appears to be looking for a balance.

Drew Clark, editor and publisher of Broadband Breakfast, simplified the issue in a webcast he moderated following the NTIA report’s release. Opinions on AI appear to fall into two camps, he said. The “accelerationists” wish to develop AI as rapidly as possible to take advantage of the many potential economic and public welfare benefits, as well as maintain an edge in global trade. The “decelerationists” seek “guardrails” around the many potential harms.

“I think the number one priority for Congress, for the administration, should be on increasing AI use,” said Daniel Castro, VP of the Information Technology and Innovation Foundation, a panelist on the Broadband Breakfast webcast. “What [China] wants to do for the next year is use AI across every part of their economy to increase productivity and grow their economy … Unfortunately, I’d say that’s not what [U.S. policymakers] are doing. Most are focused on AI risk.”

“The executive order and the NTIA report I think were steps in the right direction. I think we can quibble about some of the details, but I think generally, the administration is thinking about it in roughly the right way,” said University of Alabama law professor Yonathan Arbel. AI technology is advancing at a “breathtaking” pace, Arbel said. That pace increases the potential for misuse at the same rate that it potentially improves lives.

Another line of discussion concerned what types of regulation would be effective. Both Castro and Arbel discussed possibly focusing on the outcomes of AI misuse rather than setting strict standards on AI itself, which could be a disincentive to technology adoption. For example, it is legal to own a Bowie knife without strict standards on knife manufacturing and ownership. Liability or criminal penalties for an injury caused by a Bowie knife depend on the circumstances.

One example of this approach is the Ensuring Likeness, Voice, and Image Security (ELVIS) Act, which Tennessee Governor Bill Lee signed last week. The law bolsters existing state copyright protections by prohibiting the use of AI to replicate a recording artist’s voice and image without authorization.